OPC DA Trigger Node

Overview

The OPC DA Trigger Node automatically starts MaestroHub pipelines when OPC DA item values change. Instead of polling, it reacts to changes reported through an OPC DA subscription, and it debounces them: all item changes that arrive within a 100ms window are combined into a single pipeline execution that carries the latest value of every affected item.

Core Functionality

What It Does

The OPC DA Trigger supports real-time, event-driven pipeline execution through four capabilities:

1. Event-Driven Pipeline Execution

Pipelines start automatically when OPC DA item values change, with no manual intervention or polling. This suits legacy system integration, process monitoring, and real-time data flows.

2. Intelligent Debouncing

Multiple item changes within a 100ms window are automatically aggregated into a single event, preventing excessive pipeline executions while ensuring you receive the latest values for all changed items.

3. Automatic Subscription Management

Subscriptions are created and cleaned up automatically, so no manual subscription management is required. The system manages the connection lifecycle and resubscribes after reconnections.

4. Aggregated Item Data

All item values that changed during the debounce window are provided in a single array, making it easy to process multiple related item changes together.

Debounce Behavior

How Debouncing Works

The OPC DA Trigger uses a 100ms fixed debounce window to aggregate rapid item changes:

- First Change: When the first item value change occurs, a 100ms timer starts

- Subsequent Changes: Any additional item changes within this window update the buffer with the latest values

- Timer Expires: After 100ms, the trigger fires once with an array containing the latest values for all items that changed

- Next Window: The process repeats for the next set of changes
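To make the window rules concrete, the following sketch replays a list of timestamped changes through a fixed 100ms window instead of a live timer, so it can be run offline. It illustrates the behavior described above and is not MaestroHub's implementation; the function name `groupIntoDebounceWindows` and the change objects (shaped like the entries in the Output Format's `values` array) are assumptions.

```javascript
// Offline simulation of a fixed 100 ms debounce window (illustrative only).
const DEBOUNCE_WINDOW_MS = 100;

// changes: { itemId, value, quality, timestamp } objects sorted by timestamp (ms).
// Returns one array per trigger firing, holding the latest change per item.
function groupIntoDebounceWindows(changes, windowMs = DEBOUNCE_WINDOW_MS) {
  const fired = [];
  let buffer = new Map(); // itemId -> latest change, in first-seen order
  let windowStart = null;

  for (const change of changes) {
    // The timer expired before this change arrived: fire, then start over.
    if (windowStart !== null && change.timestamp >= windowStart + windowMs) {
      fired.push([...buffer.values()]);
      buffer = new Map();
      windowStart = null;
    }
    // The first change in a window starts the timer; later ones do not reset it.
    if (windowStart === null) {
      windowStart = change.timestamp;
    }
    // A newer change to the same item replaces the buffered one.
    buffer.set(change.itemId, change);
  }
  // Flush the last open window.
  if (buffer.size > 0) {
    fired.push([...buffer.values()]);
  }
  return fired;
}

const batches = groupIntoDebounceWindows([
  { itemId: "Channel1.Device1.Temperature", value: 78.1, quality: 192, timestamp: 0 },
  { itemId: "Channel1.Device1.Pressure", value: 145.2, quality: 192, timestamp: 40 },
  { itemId: "Channel1.Device1.Temperature", value: 78.5, quality: 192, timestamp: 90 },
  { itemId: "Channel1.Device1.Temperature", value: 78.9, quality: 192, timestamp: 150 },
]);
console.log(batches.length); // 2
```

The first window opens at 0 ms and closes at 100 ms, so the Temperature update at 90 ms replaces the one from 0 ms and the first batch holds Temperature = 78.5 and Pressure = 145.2. The update at 150 ms opens a second window. Because later changes do not restart the timer, an item that changes continuously still yields one batch roughly every 100ms.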

Benefits of Debouncing

| Scenario | Without Debouncing | With Debouncing |
|---|---|---|
| 10 item changes within 100ms | 10 separate pipeline executions | 1 pipeline execution with the latest value of each changed item |
| Burst updates | Pipeline overload, potential queueing | Smooth processing with aggregated data |
| Related item changes | Process items individually, lose context | Process all related changes together |

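The 100ms debounce window is fixed and cannot be configured. It is intended to balance responsiveness against aggregation for most industrial automation scenarios: a change is delivered at most about 100ms after the window opens, while bursts are still grouped into one execution.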

Reconnection Handling

MaestroHub automatically handles connection disruptions to ensure reliable item monitoring.

Automatic Recovery

When an OPC DA connection is lost and restored:

- Connection Lost: The system detects the disconnection automatically

- Connection Restored: Subscriptions are automatically re-established within seconds

- Transparent Recovery: Pipelines continue to receive item changes once the connection is restored, with no manual intervention required

What This Means for Your Workflows

| Scenario | Behavior |
|---|---|
| Brief network interruption | Automatic resubscription after reconnection |
| OPC DA server restart | Subscriptions automatically restored when server comes back online |
| MaestroHub restart | All triggers for enabled pipelines are restored on startup |

While the trigger automatically reconnects, item changes that occur during the disconnection period may be missed. For critical data, consider implementing a complementary polling mechanism or configuring appropriate deadband and update rate settings in your OPC DA subscription.

Configuration Options

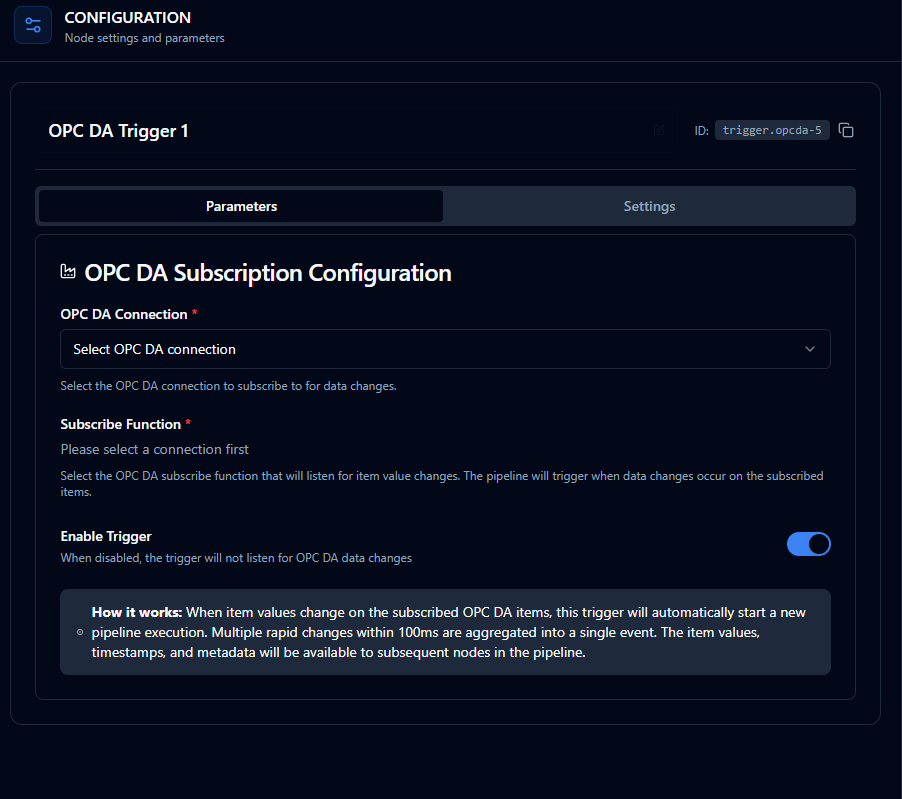

Parameters Configuration

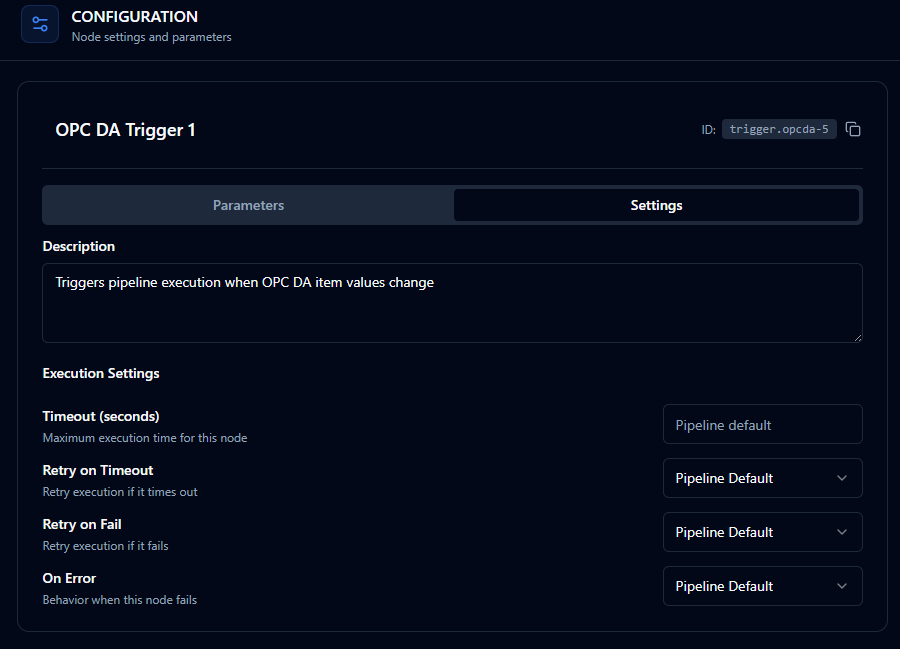

Settings Configuration

Basic Information

| Field | Type | Description |
|---|---|---|
| Node Label | String (Required) | Display name for the node on the pipeline canvas |
| Description | String (Optional) | Explains what this trigger monitors and initiates |

Parameters

| Parameter | Type | Required | Default | Description |
|---|---|---|---|---|
| Connection | Connection ID | Yes | - | The OPC DA connection profile to use for subscribing |
| Function | Function ID | Yes | - | The OPC DA Subscribe function that defines which items to monitor |
| Enabled | Boolean | No | true | When disabled, the subscription is not created even if the pipeline is enabled |

The selected function must be an OPC DA Subscribe function type. Read, write, or browse functions cannot be used with OPC DA Trigger nodes.

Settings

Basic Settings

| Setting | Options | Default | Recommendation |
|---|---|---|---|
| Retry on Fail | true / false | false | Keep disabled for triggers; retries don't apply to event-driven nodes |
| Error Handling | Stop Pipeline / Continue | Stop Pipeline | Use "Stop Pipeline" to halt on critical failures |

Documentation Settings

| Setting | Type | Default | Purpose |
|---|---|---|---|
| Notes | Text | Empty | Document the trigger's purpose, monitored items, or operational context |
| Display Note in Pipeline | Boolean | false | Show notes on the pipeline canvas for quick reference |

Output Data Structure

When OPC DA item changes trigger pipeline execution, the following data is available to downstream nodes via the $input variable.

Output Format

```json
{
  "type": "opcda_trigger",
  "connectionId": "bf29be94-fc0a-4dc4-8e5c-092f1b74eb4b",
  "functionId": "aef374c3-aa2b-454e-aabc-5657faac5950",
  "enabled": true,
  "node_id": "trigger.opcda-1",
  "message": "OPC DA trigger provides configuration for event-based triggering",
  "values": [
    {
      "itemId": "Channel1.Device1.Temperature",
      "value": 78.5,
      "quality": 192,
      "timestamp": 1704067200000
    },
    {
      "itemId": "Channel1.Device1.Pressure",
      "value": 145.2,
      "quality": 192,
      "timestamp": 1704067200050
    }
  ]
}
```

Accessing Item Data

In downstream nodes, use the $input variable to access the trigger output:

| Field | Expression | Description |
|---|---|---|
| Item Values Array | $input.values | Array of all item values that changed during the debounce window |
| Individual Item | $input.values[0] | Access a specific item by array index |
| Item ID | $input.values[0].itemId | The full OPC DA item identifier |
| Item Value | $input.values[0].value | The current value of the item |
| Item Quality | $input.values[0].quality | Quality code (192 = Good, see OPC DA specification) |
| Item Timestamp | $input.values[0].timestamp | Unix timestamp in milliseconds when the value changed |
| Connection ID | $input.connectionId | The OPC DA connection profile used |
| Function ID | $input.functionId | The Subscribe function that received the changes |
| Node ID | $input.node_id | The trigger node identifier |
| Trigger Type | $input.type | Always opcda_trigger |

Use a JavaScript node or loop to iterate through $input.values and process each item change:

```javascript
$input.values.forEach(item => {
  if ((item.quality & 0xC0) === 0xC0) { // Good quality class (includes 192)
    // Process good-quality item values
  }
});
```
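Because `values` contains only the items that changed during that window, a given item is not always at the same array index. Indexing the array by `itemId` may be more robust. The sketch below is written for a JavaScript node where `$input` is available; for a self-contained run it uses a hypothetical `sampleInput` copied from the Output Format above, and the helper name `indexValuesByItemId` is illustrative.

```javascript
// Build a lookup keyed by item ID from the trigger output.
// In a JavaScript node, call indexValuesByItemId($input).
function indexValuesByItemId(input) {
  const byItemId = {};
  for (const item of input.values ?? []) {
    byItemId[item.itemId] = {
      value: item.value,
      quality: item.quality,
      // timestamp is Unix epoch milliseconds; ISO text is easier to log
      time: new Date(item.timestamp).toISOString(),
    };
  }
  return byItemId;
}

// Hypothetical sample, copied from the Output Format example.
const sampleInput = {
  type: "opcda_trigger",
  values: [
    { itemId: "Channel1.Device1.Temperature", value: 78.5, quality: 192, timestamp: 1704067200000 },
    { itemId: "Channel1.Device1.Pressure", value: 145.2, quality: 192, timestamp: 1704067200050 },
  ],
};

const byId = indexValuesByItemId(sampleInput);
// Items that did not change in this window are simply absent.
const pressure = byId["Channel1.Device1.Pressure"];
console.log(pressure ? pressure.value : "Pressure did not change in this window"); // 145.2
```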

Validation Rules

The OPC DA Trigger Node enforces these validation requirements:

Parameter Validation

Connection ID

- Must be provided and non-empty

- Must reference a valid OPC DA connection profile

- Error: "OPC DA connection is required"

Function ID

- Must be provided and non-empty

- Must reference a valid OPC DA Subscribe function

- Function must belong to the specified connection

- Error: "Subscribe function is required"

Enabled Flag

- Must be a boolean if provided

- Error: "Enabled must be a boolean value"

Settings Validation

On Error

- Must be one of: stopPipeline, continueExecution, retryNode

- Error: "onError must be a valid error handling option"
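The parameter and settings rules above can be expressed as a single check. This is a sketch, not MaestroHub's validator: the flat config shape (`connectionId`, `functionId`, `enabled`, `onError`) and the function name are assumptions, an omitted `onError` is treated as "use the default", and checks that need the server (the profile exists, the function is a Subscribe function on that connection) are left out.

```javascript
// Hypothetical config validator mirroring the documented rules and messages.
const VALID_ON_ERROR = ["stopPipeline", "continueExecution", "retryNode"];

function validateOpcDaTriggerConfig(config) {
  const errors = [];
  const isNonEmptyString = v => typeof v === "string" && v.trim() !== "";

  if (!isNonEmptyString(config.connectionId)) {
    errors.push("OPC DA connection is required");
  }
  if (!isNonEmptyString(config.functionId)) {
    errors.push("Subscribe function is required");
  }
  // enabled is optional, but must be a boolean when provided
  if (config.enabled !== undefined && typeof config.enabled !== "boolean") {
    errors.push("Enabled must be a boolean value");
  }
  // onError is assumed optional here (falls back to the default)
  if (config.onError !== undefined && !VALID_ON_ERROR.includes(config.onError)) {
    errors.push("onError must be a valid error handling option");
  }
  return errors;
}

console.log(validateOpcDaTriggerConfig({ connectionId: "", enabled: "yes", onError: "ignore" }));
```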

Usage Examples

Legacy PLC Monitoring

Scenario: Monitor critical process parameters from legacy PLCs via OPC DA and trigger alerts when values exceed thresholds.

Configuration:

- Label: Legacy PLC Monitor

- Connection: Plant Floor OPC DA Server

- Function: Subscribe to Channel1.Device1.* (all items on Device 1)

- Enabled: true

Downstream Processing:

- Iterate through the $input.values array

- Check each item value against configured thresholds

- Generate alerts for out-of-range values

- Log all changes to time-series database

- Update real-time dashboard
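The threshold check in this scenario might look like the following in a JavaScript node. The limits in `THRESHOLDS`, the alert object shape, and the function name `findOutOfRange` are hypothetical; pass `$input` in place of the inline sample.

```javascript
// Hypothetical limits per item ID; replace with your own configuration.
const THRESHOLDS = {
  "Channel1.Device1.Temperature": { min: 10, max: 80 },
  "Channel1.Device1.Pressure": { min: 50, max: 140 },
};

function findOutOfRange(input, thresholds) {
  const alerts = [];
  for (const item of input.values ?? []) {
    const limits = thresholds[item.itemId];
    // Skip items without limits, non-Good quality, and non-numeric values
    if (!limits || (item.quality & 0xC0) !== 0xC0 || typeof item.value !== "number") {
      continue;
    }
    if (item.value < limits.min || item.value > limits.max) {
      alerts.push({ itemId: item.itemId, value: item.value, limits, timestamp: item.timestamp });
    }
  }
  return alerts;
}

const plcAlerts = findOutOfRange({ values: [
  { itemId: "Channel1.Device1.Temperature", value: 78.5, quality: 192, timestamp: 1704067200000 },
  { itemId: "Channel1.Device1.Pressure", value: 145.2, quality: 192, timestamp: 1704067200050 },
] }, THRESHOLDS);
console.log(plcAlerts.length); // 1 (Pressure is above its max of 140)
```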

Process Control Integration

Scenario: React to setpoint changes in legacy DCS systems and coordinate with modern control systems.

Configuration:

- Label: DCS Setpoint Monitor

- Connection: DCS OPC DA Server

- Function: Subscribe to DCS.Unit1.Setpoints.* (all setpoints for Unit 1)

- Enabled: true

Downstream Processing:

- Extract setpoint name from item ID

- Validate setpoint value ranges

- Update modern PLC via OPC UA or Modbus

- Log setpoint changes for audit trail

- Notify operators of critical changes
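A sketch of the first two downstream steps, assuming dot-separated item IDs whose last segment is the setpoint name (for example `DCS.Unit1.Setpoints.ReactorTemp`). The setpoint names, ranges, and helper names are hypothetical.

```javascript
// Hypothetical allowed ranges per setpoint name.
const SETPOINT_RANGES = {
  ReactorTemp: { min: 150, max: 250 },
  FeedRate: { min: 0, max: 40 },
};

// Last path segment of the item ID, e.g. "ReactorTemp"
function setpointName(itemId) {
  const parts = itemId.split(".");
  return parts[parts.length - 1];
}

function checkSetpoints(input) {
  return (input.values ?? []).map(item => {
    const name = setpointName(item.itemId);
    const range = SETPOINT_RANGES[name];
    // Unknown setpoint names are reported as out of range for review
    const inRange = range !== undefined && item.value >= range.min && item.value <= range.max;
    return { name, value: item.value, inRange, timestamp: item.timestamp };
  });
}

const setpointResults = checkSetpoints({ values: [
  { itemId: "DCS.Unit1.Setpoints.ReactorTemp", value: 265, quality: 192, timestamp: 1704067200000 },
  { itemId: "DCS.Unit1.Setpoints.FeedRate", value: 32, quality: 192, timestamp: 1704067200020 },
] });
console.log(setpointResults.map(r => `${r.name}: ${r.inRange}`));
```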

Alarm State Tracking

Scenario: Monitor alarm states from legacy SCADA systems and integrate with modern alarm management.

Configuration:

- Label: Legacy Alarm Monitor

- Connection: SCADA OPC DA Server

- Function: Subscribe to Alarms.*.* (all alarm items)

- Enabled: true

Downstream Processing:

- Filter for alarm state changes

- Extract alarm details from item ID

- Map to modern alarm format

- Update centralized alarm database

- Trigger notification workflows
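One way to map legacy alarm items into a normalized record, assuming item IDs of the form `Alarms.<Area>.<AlarmName>` and that a truthy value means the alarm is active. The record fields and the function name are hypothetical; adapt them to your alarm database schema.

```javascript
// Convert alarm item changes into hypothetical normalized alarm records.
function toAlarmRecords(input) {
  const records = [];
  for (const item of input.values ?? []) {
    const [root, area, ...rest] = item.itemId.split(".");
    // Skip anything that does not look like Alarms.<Area>.<AlarmName>
    if (root !== "Alarms" || !area || rest.length === 0) continue;
    records.push({
      source: "legacy-scada",
      area,
      name: rest.join("."),
      active: Boolean(item.value),
      qualityGood: (item.quality & 0xC0) === 0xC0,
      occurredAt: new Date(item.timestamp).toISOString(),
    });
  }
  return records;
}

const alarmRecords = toAlarmRecords({ values: [
  { itemId: "Alarms.Boiler.HighPressure", value: true, quality: 192, timestamp: 1704067200000 },
  { itemId: "Alarms.Boiler.LowLevel", value: 0, quality: 192, timestamp: 1704067200030 },
] });
console.log(alarmRecords.map(r => `${r.area}/${r.name} active=${r.active}`));
```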

Multi-Item Batch Processing

Scenario: Process related item changes together for batch operations in legacy systems.

Configuration:

- Label: Batch Recipe Monitor

- Connection: Recipe System OPC DA

- Function: Subscribe to Recipe.Active.* (all active recipe parameters)

- Enabled: true

Downstream Processing:

- Receive all recipe parameter changes in single event

- Validate complete recipe configuration

- Update legacy PLC setpoints as a batch

- Log recipe change event

- Confirm successful application
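The trigger only groups changes that arrive within the same 100ms window, so a recipe download spread over a longer period, or one that changes only some parameters, can reach the pipeline as a partial set. A validation step might therefore check for the full parameter list before applying anything. In this sketch, `REQUIRED_PARAMS`, the item names, and `checkRecipeBatch` are hypothetical.

```javascript
// Hypothetical list of parameters that make up a complete recipe.
const REQUIRED_PARAMS = [
  "Recipe.Active.Temperature",
  "Recipe.Active.Duration",
  "Recipe.Active.Speed",
];

function checkRecipeBatch(input, required) {
  const received = new Map((input.values ?? []).map(item => [item.itemId, item]));
  const missing = required.filter(id => !received.has(id));
  const badQuality = [...received.values()]
    .filter(item => (item.quality & 0xC0) !== 0xC0)
    .map(item => item.itemId);
  return { complete: missing.length === 0 && badQuality.length === 0, missing, badQuality };
}

const recipeCheck = checkRecipeBatch({ values: [
  { itemId: "Recipe.Active.Temperature", value: 180, quality: 192, timestamp: 1704067200000 },
  { itemId: "Recipe.Active.Duration", value: 45, quality: 192, timestamp: 1704067200010 },
] }, REQUIRED_PARAMS);
console.log(recipeCheck.complete, recipeCheck.missing); // Speed did not arrive in this event
```

If a partial set is normal for your system, the pipeline could instead read the remaining parameters from the server before applying the batch.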

Best Practices

Connection Health Monitoring

- Configure your OPC DA connection with appropriate Keep Alive intervals

- Enable Auto Reconnect on the connection profile for automatic recovery

- Monitor connection status through MaestroHub's connection health dashboard

- Test reconnection behavior during maintenance windows

Designing Subscriptions

| Practice | Rationale |
|---|---|
| Group related items | Leverage debouncing to process related changes together |
| Use specific item paths | Reduces unnecessary processing and improves performance |
| Configure appropriate update rates | Balance responsiveness with server load (typical: 100-1000ms) |
| Set reasonable deadbands | Prevent excessive updates for analog values with minor fluctuations |
| Monitor subscription count | Each subscription consumes server resources |
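For the deadband row above: in OPC DA, a percent deadband on an analog item suppresses updates unless the value moves by more than that percentage of the item's engineering-unit (EU) range. The sketch below computes that test to help size a deadband; the server applies the actual filter, and the function name is illustrative.

```javascript
// Percent deadband test for an analog item:
// report only if |next - last| > (percent / 100) * (euHigh - euLow).
function exceedsPercentDeadband(lastReported, next, percentDeadband, euLow, euHigh) {
  const threshold = (percentDeadband / 100) * (euHigh - euLow);
  return Math.abs(next - lastReported) > threshold;
}

// Pressure transmitter ranged 0-200 psi with a 0.5% deadband: threshold = 1 psi
console.log(exceedsPercentDeadband(145.2, 145.9, 0.5, 0, 200)); // false
console.log(exceedsPercentDeadband(145.2, 146.4, 0.5, 0, 200)); // true
```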

Working with Debounced Data

Processing the Values Array:

- Always check $input.values.length before processing

- Validate item quality before using values (192 = Good)

- Handle missing or null values gracefully

- Consider item timestamps when ordering is important
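The checks in this list can be combined into a single helper. This sketch assumes the `$input` shape from Output Data Structure; the name `usableValues` is hypothetical, and treating every Good-class code (not only 192) as usable is a choice you may want to tighten.

```javascript
// Keep Good-quality, non-null values, ordered by source timestamp.
function usableValues(input) {
  if (!input || !Array.isArray(input.values) || input.values.length === 0) {
    return []; // nothing to process in this execution
  }
  return input.values
    .filter(item => (item.quality & 0xC0) === 0xC0)                 // Good quality class
    .filter(item => item.value !== null && item.value !== undefined) // drop missing values
    .sort((a, b) => a.timestamp - b.timestamp);                     // oldest first; filter already copied the array
}

const cleaned = usableValues({ values: [
  { itemId: "B", value: 2, quality: 192, timestamp: 20 },
  { itemId: "A", value: 1, quality: 216, timestamp: 10 },
  { itemId: "C", value: null, quality: 192, timestamp: 5 },
  { itemId: "D", value: 4, quality: 0, timestamp: 1 },
] });
console.log(cleaned.map(item => item.itemId)); // A, then B
```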

Performance Considerations:

- The debounce window aggregates changes, reducing pipeline executions

- Process all items in the array efficiently to maintain throughput

- Avoid heavy processing in tight loops over large item arrays

Error Handling Strategies

For Critical Monitoring:

- Set On Error to stopPipeline

- Implement alerting for stopped pipelines

- Monitor pipeline execution failures

- Consider backup monitoring mechanisms

For Best-Effort Processing:

- Set On Error to continueExecution

- Log failed events for later analysis

- Ensure downstream nodes handle partial failures gracefully

- Implement retry logic for critical operations
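For the retry recommendation, a common pattern is exponential backoff around the critical call. `withRetry`, its options, and the simulated historian write are illustrative assumptions, not MaestroHub APIs. Keeping the attempt count small limits how long a failing dependency can hold up a pipeline execution.

```javascript
// Retry an async operation with exponential backoff (illustrative helper).
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

async function withRetry(operation, { attempts = 3, baseDelayMs = 200, maxDelayMs = 5000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await operation(attempt);
    } catch (err) {
      lastError = err;
      if (attempt === attempts) break;
      // baseDelayMs, 2x, 4x, ... capped at maxDelayMs
      const delay = Math.min(baseDelayMs * 2 ** (attempt - 1), maxDelayMs);
      await sleep(delay);
    }
  }
  throw lastError;
}

// Usage: a simulated write that fails twice before succeeding
let writeCalls = 0;
withRetry(async () => {
  writeCalls++;
  if (writeCalls < 3) throw new Error("historian unavailable");
  return "written";
}, { attempts: 5, baseDelayMs: 10 }).then(result => console.log(result, writeCalls)); // logs: written 3
```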

Item Quality Handling

Always check item quality before processing values:

```javascript
$input.values.forEach(item => {
  // OPC DA quality class = bits 7-6 of the quality byte (mask 0xC0)
  const qualityClass = item.quality & 0xC0;
  if (qualityClass === 0xC0) {
    // Good quality (192 and other Good codes) - process data
  } else if (qualityClass === 0x00) {
    // Bad quality - log error, skip processing
  } else if (qualityClass === 0x40) {
    // Uncertain quality - decide based on use case
  }
});
```

Common OPC DA quality codes (the base value of each quality class; `quality & 0xC0` yields one of these):

- 192 (0xC0): Good

- 0 (0x00): Bad

- 64 (0x40): Uncertain
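The quality value packs three fields into its low byte: the quality class (bits 7-6), a substatus (bits 5-2), and a limit status (bits 1-0), as defined in the OPC DA specification. The sketch below decodes them; `decodeQuality` is a hypothetical helper name. For example, 216 (0xD8) is Good with the Local Override substatus, which a strict `=== 192` comparison would reject.

```javascript
// Decode the low byte of an OPC DA quality word: QQSSSSLL.
const QUALITY_CLASS = { 0: "Bad", 1: "Uncertain", 2: "Not used", 3: "Good" };
const LIMIT_STATUS = { 0: "Not limited", 1: "Low limited", 2: "High limited", 3: "Constant" };

function decodeQuality(quality) {
  return {
    status: QUALITY_CLASS[(quality >> 6) & 0x3], // bits 7-6
    substatus: (quality >> 2) & 0xF,             // bits 5-2
    limit: LIMIT_STATUS[quality & 0x3],          // bits 1-0
  };
}

console.log(decodeQuality(192).status);    // Good
console.log(decodeQuality(216).substatus); // 6 (Local Override)
```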

Enable vs. Disable

- Use the trigger's Enabled parameter to temporarily pause monitoring without changing pipeline state

- Disable triggers during maintenance windows to prevent unnecessary processing

- Document the reason for disabled triggers in the node's Notes field

- Re-enable triggers after testing configuration changes

Performance Optimization

| Scenario | Recommendation |
|---|---|
| High-frequency items | Configure appropriate update rates and deadbands in subscription |
| Large item counts | Monitor pipeline execution queue; optimize downstream processing |
| Multiple triggers on same items | MaestroHub optimizes with shared subscriptions; no action needed |
| Burst updates | Debouncing handles this automatically; ensure downstream can process arrays efficiently |

Legacy System Considerations

OPC DA Specific Challenges:

- DCOM Configuration: Ensure proper DCOM permissions and firewall rules

- Server Stability: Legacy OPC DA servers may have limited connection capacity

- Quality Codes: Familiarize yourself with OPC DA quality code structure

- Item Naming: Legacy systems may have inconsistent or cryptic item names

- Time Synchronization: Ensure server and client clocks are synchronized

Troubleshooting

Common Issues

No Pipeline Executions

- Verify the trigger is enabled

- Check that the pipeline is enabled

- Confirm the OPC DA connection is active

- Verify items are actually changing values

- Check subscription function configuration

- Validate DCOM permissions and firewall rules

Missing Item Changes

- Connection was down during changes (expected behavior)

- Item quality may be Bad (filtered by subscription)

- Subscription function may not include the item path

- Check OPC DA server subscription status

- Verify update rate and deadband settings

Too Many Pipeline Executions

- Debouncing caps a trigger at one execution per 100ms window, so items that change continuously can still produce up to roughly 10 executions per second

- Check if multiple triggers are configured for same items

- Review item update rates in subscription configuration

- Consider increasing deadband for analog values

Empty Values Array

- Subscription may have no matching items

- All item changes may have Bad quality

- Connection issue during debounce window

- Check OPC DA server item availability

Connection Issues

- Verify DCOM configuration on both client and server

- Check Windows firewall rules

- Ensure OPC DA server is running and accessible

- Validate credentials and permissions

- Review OPC DA server logs for connection errors