PostgreSQL Integration Guide

PostgreSQL Integration Guide

Connect to PostgreSQL databases to read and write industrial data in your pipelines. This comprehensive guide walks you through everything from basic setup to advanced configurations.

Overview

The PostgreSQL connector is your gateway to relational database operations in MaestroHub. It enables you to:

- Read data from tables, views, or custom queries

- Write data with insert, update, or delete operations

- Use parameterized queries for dynamic, reusable data operations

- Secure connections with SSL/TLS encryption

The PostgreSQL connector also supports TimescaleDB connections for time-series data.

Connection Configuration

Creating a PostgreSQL Connection

Navigate to Connections → New Connection → PostgreSQL and configure the following:

PostgreSQL Connection Creation Fields

1. Profile Information

| Field | Default | Description |

|---|---|---|

| Profile Name | - | A descriptive name for this connection profile (required, max 100 characters) |

| Description | - | Optional description for this PostgreSQL connection |

2. Database Configuration

| Field | Default | Description |

|---|---|---|

| Host | localhost | PostgreSQL server hostname or IP address - required |

| Port | 5432 | PostgreSQL server port (1-65535) - required |

| Connect Timeout (sec) | 30 | Maximum time to wait for connection establishment (0-600 seconds) - required |

| Schema | public | Database schema to use - required |

| Database | - | Database name to connect to (e.g., mydb) - required |

Note: Supported PostgreSQL versions: 9.6+

3. Basic Authentication

| Field | Default | Description |

|---|---|---|

| Username | - | PostgreSQL database user (required) |

| Password | - | PostgreSQL user password (required) |

4. SSL Settings

4a. SSL Configuration

| Field | Default | Description |

|---|---|---|

| Enable SSL | true | Use encrypted connection to PostgreSQL server |

4b. SSL Mode and Certificates

(Only displayed when SSL is enabled)

| Field | Default | Description |

|---|---|---|

| SSL Mode | require | TLS/SSL connection mode used by the driver (require / verify-ca / verify-full) |

| CA Certificate | - | Trusted CA certificate (sslrootcert) in PEM format |

| Client Certificate | - | Optional client certificate (sslcert) in PEM format |

| Private Key | - | Private key for client certificate (sslkey) in PEM format |

SSL Mode Options

require: Requires SSL connection but does not verify server certificateverify-ca: Requires SSL and verifies that the server certificate is issued by a trusted CAverify-full: Requires SSL and verifies both the CA and that the server hostname matches the certificate

5. Connection Pool Settings

| Field | Default | Description |

|---|---|---|

| Max Open Connections | 100 | Maximum number of simultaneous database connections (0-1000). Higher values increase concurrency but add DB load |

| Max Idle Connections | 25 | Idle connections kept ready for reuse to reduce latency (0-1000, 0 = close idle connections immediately) |

| Connection Max Lifetime (sec) | 900 | Maximum age of a connection before it is recycled (0-86400 seconds, 0 = keep connections indefinitely). Helpful to avoid server-side timeouts |

| Connection Max Idle Time (sec) | 300 | How long an idle connection may remain unused before being closed (0-86400 seconds, 0 = disable idle timeout) |

Connection pool settings help optimize database performance by balancing concurrency, resource usage, and latency across workloads.

6. Retry Configuration

| Field | Default | Description |

|---|---|---|

| Retries | 3 | Number of connection attempts (0-10, 0 = no retry) |

| Retry Delay (ms) | 100 | Delay between retry attempts in milliseconds (0-3600000 ms) |

| Retry Backoff Multiplier | 2 | Exponential factor for retry delay growth (1-10, e.g., 2.0 means each retry waits twice as long) |

Example Retry Behavior

- With

Retry Delay = 100msandRetry Backoff Multiplier = 2:- 1st retry: wait 100ms

- 2nd retry: wait 200ms

- 3rd retry: wait 400ms

7. Advanced Features

| Field | Default | Description |

|---|---|---|

| Use TimescaleDB | false | Enable TimescaleDB-specific optimizations (no DSN change required) |

8. Connection Labels

| Field | Default | Description |

|---|---|---|

| Labels | - | Key-value pairs to categorize and organize this PostgreSQL connection (max 10 labels) |

Example Labels

env: prod– Environmentteam: data– Responsible team

- Required Fields: All fields described as "required" must be filled.

- SSL/TLS: When SSL is disabled, SSL Mode is automatically set to disable.

- Connection Pooling: Manages concurrency with

Max Open Connections, reduces latency withMax Idle Connections, mitigates stale connections viaConnection Max Lifetime, and frees resources usingConnection Max Idle Time. - Retry Logic: Implements exponential backoff—initial connection attempts use the base delay and each retry multiplies the delay by the backoff factor; ideal for transient network issues or database restarts.

- TimescaleDB: When enabled, provides optimizations for time-series workloads.

- Timeout Values:

Connect Timeoutapplies to initial connection establishment; all timeout values are in seconds unless noted (Retry Delay uses milliseconds). - Security Best Practices: Always enable SSL for production, prefer

verify-full, supply CA certificates forverify-caorverify-full, and include client certificates for mutual TLS when possible.

Function Builder

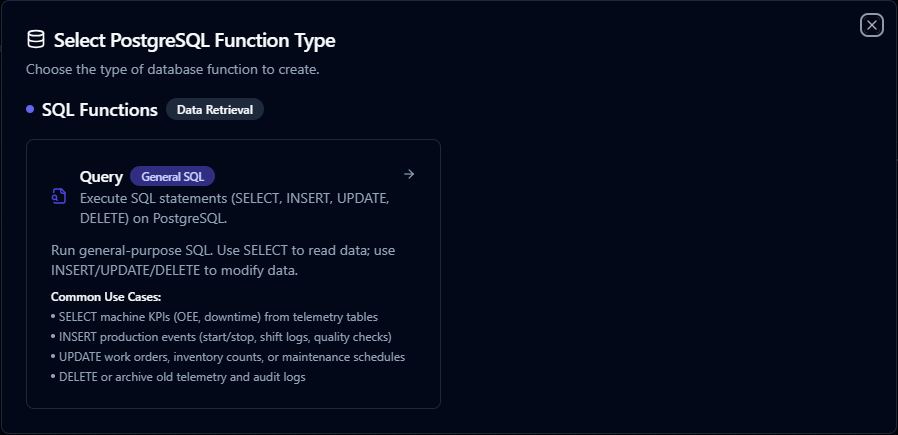

Creating PostgreSQL Functions

Once you have a connection established, you can create reusable query functions:

- Navigate to Functions → New Function

- Select PostgreSQL Query as the function type

- Choose your PostgreSQL connection

- Write your SQL query with parameters

PostgreSQL query function creation interface with SQL editor and parameter configuration

Query Function

Purpose: Execute SQL statements (SELECT, INSERT, UPDATE, DELETE) on PostgreSQL. Run general-purpose SQL to read or modify data in your PostgreSQL database.

Configuration Fields

| Field | Type | Required | Default | Description |

|---|---|---|---|---|

| SQL Query | String | Yes | - | SQL statement to execute (SELECT or INSERT/UPDATE/DELETE). Supports parameterized queries. |

| Timeout (seconds) | Number | No | 0 | Per-execution timeout in seconds. Sets maximum time allowed for query execution. |

Use Cases:

- SELECT machine KPIs (OEE, downtime) from telemetry tables

- INSERT production events (start/stop, shift logs, quality checks)

- UPDATE work orders, inventory counts, or maintenance schedules

- DELETE or archive old telemetry and audit logs

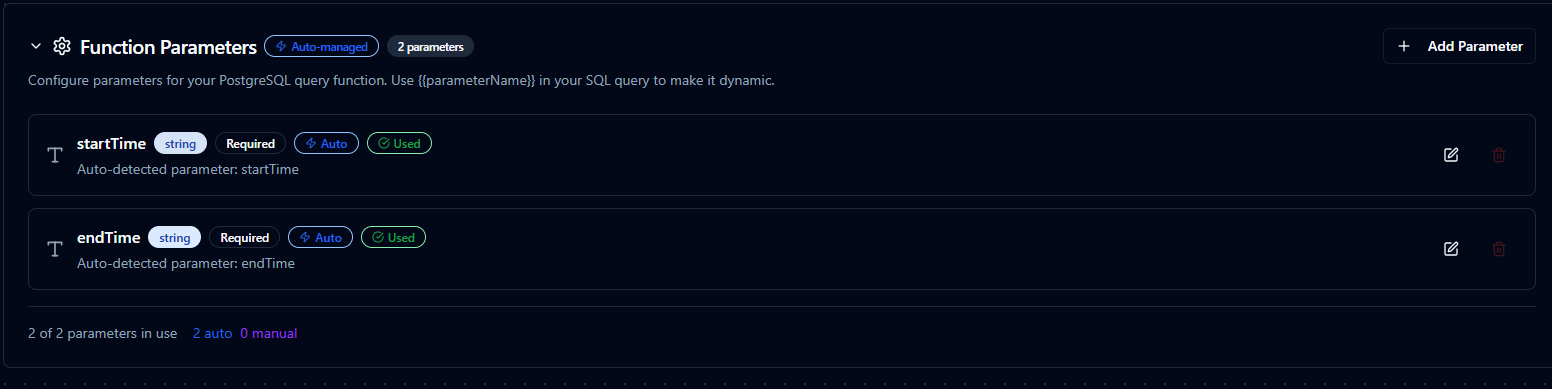

Using Parameters

The ((parameterName)) syntax creates dynamic, reusable queries. Parameters are automatically detected and can be configured with:

| Configuration | Description | Example |

|---|---|---|

| Type | Data type validation | string, number, boolean, date, array |

| Required | Make parameters mandatory or optional | Required / Optional |

| Default Value | Fallback value if not provided | NOW(), 0, active |

| Description | Help text for users | "Start date for the report" |

Parameter configuration interface showing type validation, required flags, default values, and descriptions

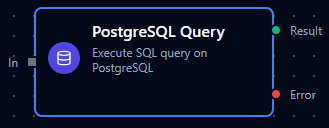

Pipeline Integration

Use the PostgreSQL query functions you create here as nodes inside the Pipeline Designer to move data in and out of your database alongside the rest of your operations stack. Drag the query node onto the canvas, bind its parameters to upstream outputs or constants, and configure connection-level options without leaving the designer.

For end-to-end orchestration ideas, such as combining database reads with MQTT, REST, or analytics steps, explore the Connector Nodes page to see how SQL nodes complement other automation patterns.

PostgreSQL query node in the pipeline designer with connection, function, and parameter configuration

Common Use Cases

Reading Data for Analysis

Scenario: Generate hourly production reports with efficiency metrics.

SELECT

DATE_TRUNC('hour', timestamp) as hour,

machine_id,

COUNT(*) as event_count,

AVG(efficiency) as avg_efficiency,

MIN(efficiency) as min_efficiency,

MAX(efficiency) as max_efficiency

FROM production_events

WHERE timestamp >= ((startDate)) AND timestamp < ((endDate))

GROUP BY hour, machine_id

ORDER BY hour DESC;

Pipeline Integration: Use in a pipeline to feed data to visualization dashboards, BI tools, or reporting nodes.

Writing Sensor Data

Scenario: Log real-time sensor readings from industrial IoT devices.

INSERT INTO sensor_readings (sensor_id, temperature, pressure, vibration, timestamp)

VALUES (((sensorId)), ((temperature)), ((pressure)), ((vibration)), NOW())

ON CONFLICT (sensor_id, timestamp)

DO UPDATE SET

temperature = EXCLUDED.temperature,

pressure = EXCLUDED.pressure,

vibration = EXCLUDED.vibration;

Pipeline Integration: Connect this function after data collection nodes (MQTT, OPC UA, Modbus, etc.) in your pipeline.

Updating Work Order Status

Scenario: Track manufacturing progress by updating work order status in real-time.

UPDATE work_orders

SET

status = ((newStatus)),

completed_units = ((completedUnits)),

completion_percentage = ROUND((((completedUnits))::numeric / total_units * 100), 2),

updated_at = NOW(),

updated_by = ((userId))

WHERE order_id = ((orderId))

RETURNING order_id, status, completion_percentage;

Pipeline Integration: Trigger this function based on production events, barcode scans, or manual workflows.

Data Retention and Cleanup

Scenario: Maintain database performance by archiving or deleting old data.

-- Archive old data before deleting

WITH archived AS (

INSERT INTO sensor_readings_archive

SELECT * FROM sensor_readings

WHERE timestamp < NOW() - INTERVAL '((retentionDays)) days'

AND archived = false

RETURNING id

)

DELETE FROM sensor_readings

WHERE id IN (SELECT id FROM archived);

Pipeline Integration: Schedule this function to run daily or weekly via pipeline triggers (cron jobs).