Pipelines

Overview

A Pipeline in MaestroHub is a visual workflow that automates data processing and business logic. Pipelines combine nodes (building blocks) to create sophisticated automation without writing code. Each pipeline can be triggered manually or on a schedule, and includes built-in execution monitoring and debugging capabilities.

Pipelines transform complex automation tasks into visual workflows that anyone can understand and maintain. With drag-and-drop design, real-time monitoring, and enterprise-grade reliability, pipelines accelerate your digital transformation initiatives.

Navigation Path: Orchestrate > Pipelines

Key Capabilities

Pipelines empower you to:

- Build visual workflows with a drag-and-drop designer using pre-built nodes across multiple categories

- Execute in parallel with level-based or sequential-branch execution strategies

- Handle errors gracefully with retry policies, fallback options, and continue-on-error logic

- Monitor in real time with live execution tracking, performance metrics, and bottleneck identification

- Debug efficiently with node-by-node breakdown, data flow visualization, and error stack traces

- Control concurrency with queue, restart, or skip overlap modes for intelligent execution management

- Track changes with automatic version control and full rollback capability

- Organize workflows with labels, descriptions, and metadata for easy categorization

Core Components

Creating Your First Pipeline

Essential configuration for creating a new pipeline:

| Field | Required | Description |
|---|---|---|
| Name | Yes | Unique identifier for your pipeline (min. 2 characters). Use descriptive, action-oriented names like customer-onboarding-automation or iot-sensor-data-processing |
| Description | No | Document your workflow's purpose, input/output expectations, and important operational notes |
| Labels | No | Key-value pairs for categorization and filtering (e.g., environment:production, team:data-engineering, owner:mike.jones). Useful for organization and governance |
| Execution Strategy | Yes | Level-Based: Maximum parallelization (recommended) or Sequential-Branch: Controlled parallel execution |
| Overlap Mode | Yes | Queue: Sequential processing (data integrity priority), Restart: Latest execution wins (real-time scenarios), or Skip: Prevent overlaps (scheduled maintenance tasks) |
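The fields in the table can also be pictured as a small data structure. This is a minimal sketch, not MaestroHub's API: `PipelineConfig`, the two enums, and `validate` are hypothetical names, and the rule that labels need a non-empty key and value is an assumption. Only the field names, the 2-character minimum, and the option names come from the table.

```python
from dataclasses import dataclass, field
from enum import Enum


class ExecutionStrategy(Enum):
    LEVEL_BASED = "level-based"
    SEQUENTIAL_BRANCH = "sequential-branch"


class OverlapMode(Enum):
    QUEUE = "queue"
    RESTART = "restart"
    SKIP = "skip"


@dataclass
class PipelineConfig:
    """Hypothetical model of the creation form; not a MaestroHub type."""
    name: str
    execution_strategy: ExecutionStrategy
    overlap_mode: OverlapMode
    description: str = ""
    labels: dict[str, str] = field(default_factory=dict)


def validate(config: PipelineConfig) -> list[str]:
    """Return a list of problems; an empty list means the config looks valid."""
    problems = []
    if len(config.name.strip()) < 2:
        problems.append("name must be at least 2 characters")
    for key, value in config.labels.items():
        # Assumption: both halves of a key-value label must be non-empty.
        if not key or not value:
            problems.append(f"label {key!r} needs a non-empty key and value")
    return problems


config = PipelineConfig(
    name="iot-sensor-data-processing",
    execution_strategy=ExecutionStrategy.LEVEL_BASED,
    overlap_mode=OverlapMode.QUEUE,
    labels={"environment": "production", "team": "data-engineering"},
)
print(validate(config))  # prints []
```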

- Use descriptive, action-oriented names for pipelines

- Use standard label keys across your organization: environment, team, owner, priority

- Start with Level-Based execution strategy for most use cases

- Choose overlap mode based on your use case: Queue for data pipelines, Restart for real-time, Skip for scheduled tasks
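To make the three overlap modes concrete, the toy model below shows what could happen to a new trigger that arrives while a run is still active. It is one reading of the mode descriptions, not MaestroHub's implementation; the `OverlapController` class and its method names are invented for illustration.

```python
from collections import deque


class OverlapController:
    """Toy model of queue / restart / skip; names are illustrative only."""

    def __init__(self, mode: str):
        if mode not in ("queue", "restart", "skip"):
            raise ValueError(f"unknown overlap mode: {mode}")
        self.mode = mode
        self.active = None        # id of the run currently executing
        self.waiting = deque()    # runs queued behind the active one
        self.cancelled = []       # runs stopped by "restart"

    def trigger(self, run_id: str) -> str:
        """Handle a new trigger and report what happened to it."""
        if self.active is None:
            self.active = run_id
            return "started"
        if self.mode == "queue":
            self.waiting.append(run_id)      # processed later, in order
            return "queued"
        if self.mode == "restart":
            self.cancelled.append(self.active)
            self.active = run_id             # latest execution wins
            return "restarted"
        return "skipped"                     # overlap prevented

    def finish(self) -> None:
        """Mark the active run done and start the next queued run, if any."""
        self.active = self.waiting.popleft() if self.waiting else None


controller = OverlapController("queue")
print(controller.trigger("run-1"), controller.trigger("run-2"))
# prints started queued
```

In this model, Queue never drops a run, Restart keeps only the newest one, and Skip keeps only the oldest one, which matches the use cases suggested in the list above.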

After creating your pipeline, the application automatically redirects you to the Pipeline Designer page for that specific pipeline. This is where you'll build your workflow by adding nodes, configuring connections, and defining your automation logic.

Pipeline Designer

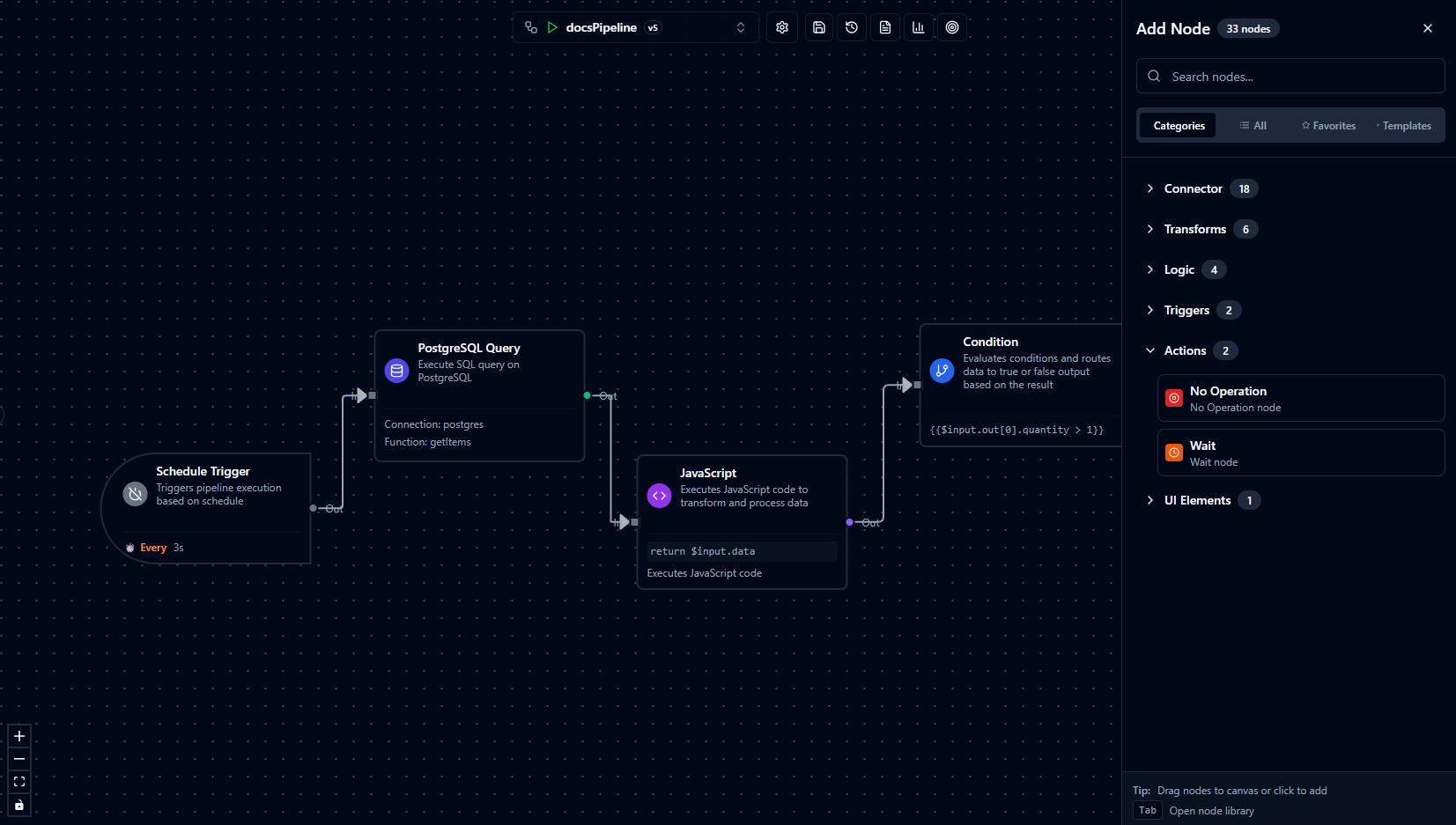

Visual workflow designer interface showing drag-and-drop canvas with nodes and connections

Transform complex business processes into elegant, visual workflows with our intuitive designer:

- Pre-built Nodes across multiple categories (Triggers, Actions, Logic, Transforms, and more)

- Visual Connection System for crystal-clear data flow visualization

- Real-time Validation catches errors before execution

- Smart Auto-layout organizes complex pipelines automatically

- Flexible Error Handling with retry policies and fallback options

- Version Control tracks every change with full rollback capability

- Canvas Operations including zoom, pan, and multi-select for efficient workflow design

Node Types

MaestroHub provides comprehensive node categories for building sophisticated workflows. Nodes are organized into categories based on their functionality, making it easy to find the right building block for your workflow.

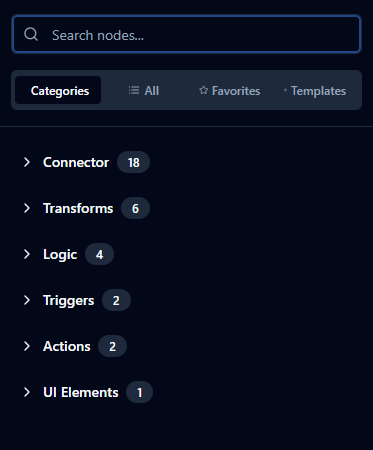

Node panel showing available node categories and nodes - the selection grows continuously with new additions

Example Categories:

- Trigger Nodes: Start your workflows (e.g., Manual, Scheduler)

- Action Nodes: Perform work and operations (e.g., API calls, data processing, notifications)

- Logic Nodes: Make decisions and control flow (e.g., conditions, loops, branches)

- Transform Nodes: Manipulate and transform data (e.g., mapping, filtering, aggregation)

- Connector Nodes: Integrate with external systems via protocols (e.g., MQTT, Modbus, OPC UA)

The Pipeline Designer displays all available node categories and nodes in the node panel. The number of available nodes continuously grows as new integrations and capabilities are added to MaestroHub. Simply drag and drop nodes onto the canvas to build your workflow. For detailed documentation on specific node types, see their respective pages.

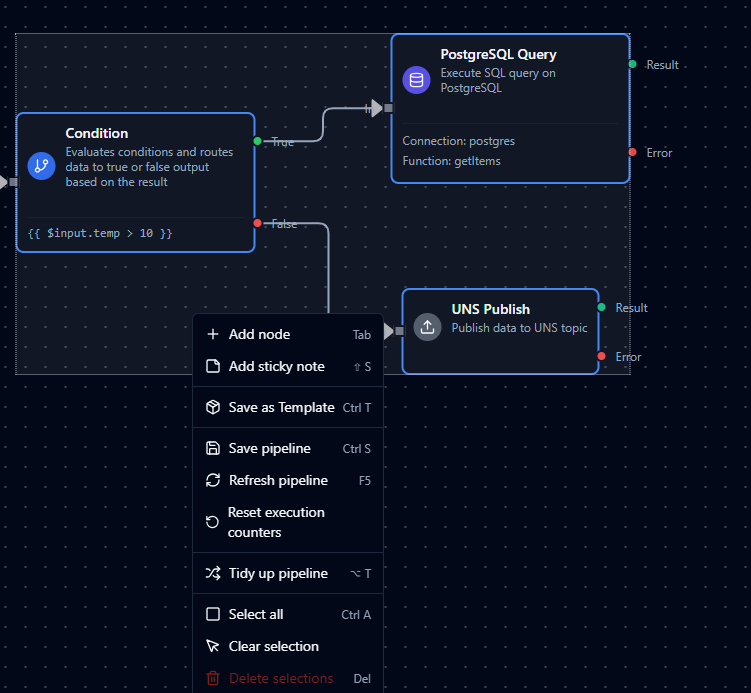

Templates

Templates let you save a selected group of nodes and their internal connections as a reusable building block that can be dropped into any pipeline. This is ideal for common sub-flows (e.g., notification handlers, error-handling patterns, multi-step integrations) that you want to standardize and reuse across teams.

- Where to find: In the Pipeline Designer, open the Add Node panel and switch to the Templates tab to browse available templates. From there, you can drag a template onto the canvas just like any other node group.

- How to create: On the canvas, select the nodes you want to reuse together (typically 2+ nodes with their connections) and save them as a template using the Save as Template action in the designer (via context menu, toolbar button, or shortcut, depending on your configuration).

Templates in the Pipeline Designer - create once, reuse across pipelines

- Why use templates:

  - Accelerate design by reusing proven patterns instead of rebuilding the same logic in multiple pipelines.

  - Enforce best practices by sharing curated, workspace-wide templates (with optional visibility scopes such as private, workspace, or public).

  - Keep flexibility by allowing template instances to expose configurable settings while still keeping the core flow consistent.
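The idea of saving "a selected group of nodes and their internal connections" can be sketched as follows. The dictionary shapes (`id`, `source`, `target`) and the `extract_template` helper are assumptions made for this example; the actual template format is not described here.

```python
def extract_template(nodes, edges, selected_ids):
    """Keep the selected nodes and only the edges that connect two of them."""
    selected = set(selected_ids)
    template_nodes = [n for n in nodes if n["id"] in selected]
    internal_edges = [
        e for e in edges
        if e["source"] in selected and e["target"] in selected
    ]
    return {"nodes": template_nodes, "edges": internal_edges}


nodes = [{"id": "trigger"}, {"id": "check"}, {"id": "notify"}]
edges = [
    {"source": "trigger", "target": "check"},
    {"source": "check", "target": "notify"},
]
template = extract_template(nodes, edges, ["check", "notify"])
print(template["edges"])  # prints [{'source': 'check', 'target': 'notify'}]
```

The edge from `trigger` to `check` is left out because only one of its endpoints is in the selection, so the saved block stays self-contained.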

Node Configuration

Each node follows a standardized configuration structure with Basic Information, Parameters, and Settings tabs. When you select a node on the canvas, the configuration panel opens on the right side.

For detailed information about node configuration structure, error handling strategies, and best practices, see the Nodes documentation.

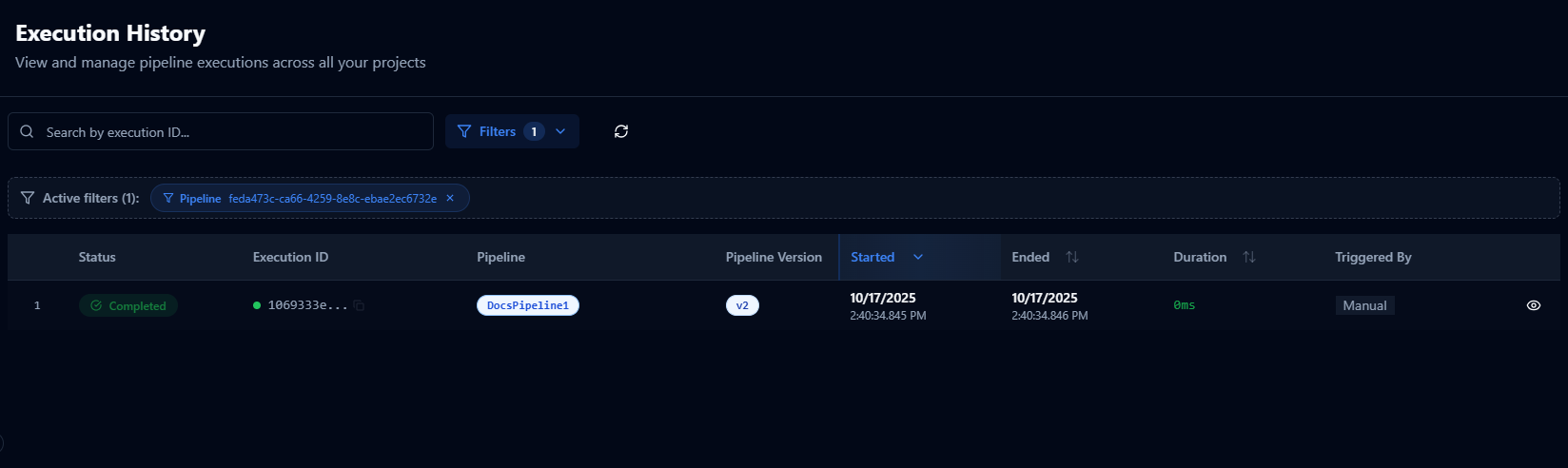

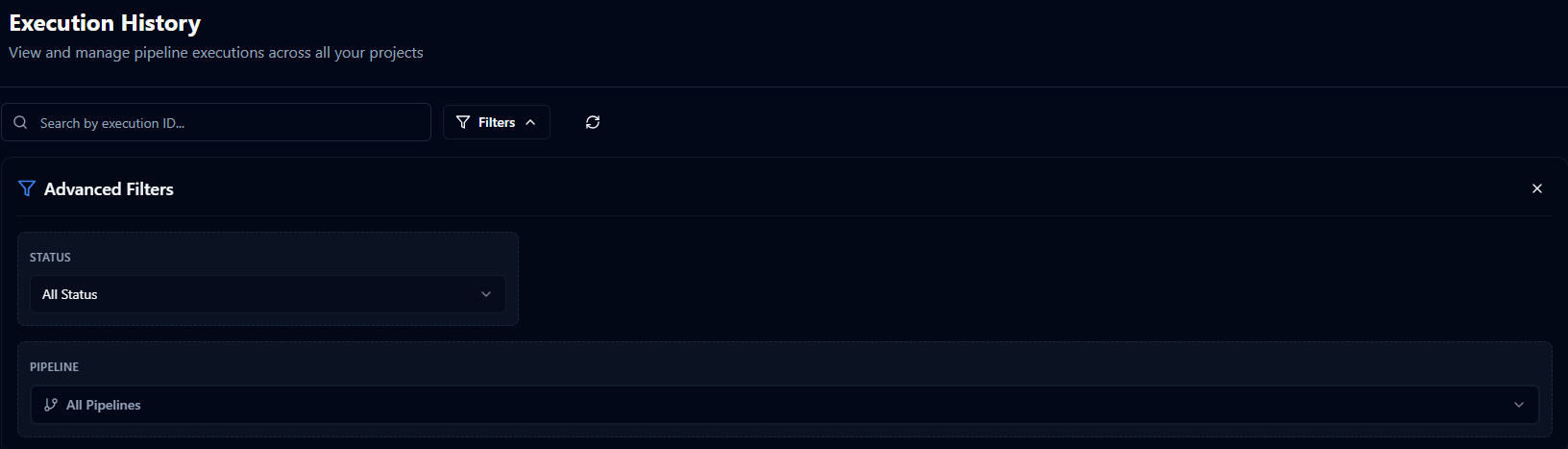

Execution History

The Execution History provides a comprehensive view of all pipeline runs, enabling you to monitor performance, troubleshoot issues, and analyze workflow behavior over time. Access execution history from the Pipeline Designer or view consolidated executions across all pipelines.

Access Points:

- From Pipeline Designer: Select the "Execution History" tab

- Direct URL with filters: Use shareable links with pre-applied filters

- All Executions View: Monitor runs across multiple pipelines

Execution history view showing pipeline runs with status indicators, timing information, and action buttons

Execution Status Lifecycle

Every execution progresses through a defined lifecycle. Understanding these states helps you monitor and manage your workflows effectively:

| Status | Description | Business Impact | Available Actions |
|---|---|---|---|
| Pending | Execution is queued and awaiting processing | Normal queue time; monitor if delays occur | Cancel if no longer needed |
| Running | Pipeline is actively executing | Real-time processing; monitor progress | View live progress, cancel if necessary |
| Completed | All nodes executed successfully | Workflow finished as designed | Review results, analyze performance |
| Failed | One or more nodes encountered errors | Requires attention and troubleshooting | View error details, rerun with fixes |
| Cancelled | Manually stopped by user | Intentional termination | Review partial results if needed |
| Partial | Some nodes succeeded, others failed | Mixed outcome requiring review | Identify failed nodes, address issues |

Executions use intuitive color coding: green for success, red for failure, blue for in-progress, and yellow for attention-needed states. Visual indicators help you quickly assess workflow health at a glance.
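For readers who script against execution data, the lifecycle table can be summarized as a lookup from status to available actions. This is a sketch only: the enum, the action identifiers, and the set of terminal statuses are assumptions derived from the table's wording, not names taken from MaestroHub.

```python
from enum import Enum


class Status(Enum):
    PENDING = "Pending"
    RUNNING = "Running"
    COMPLETED = "Completed"
    FAILED = "Failed"
    CANCELLED = "Cancelled"
    PARTIAL = "Partial"


# Statuses after which an execution no longer changes (an assumption
# inferred from the descriptions in the table).
TERMINAL = {Status.COMPLETED, Status.FAILED, Status.CANCELLED, Status.PARTIAL}

# Actions per status, abbreviated from the "Available Actions" column.
ACTIONS = {
    Status.PENDING: ["cancel"],
    Status.RUNNING: ["view_progress", "cancel"],
    Status.COMPLETED: ["review_results"],
    Status.FAILED: ["view_errors", "rerun"],
    Status.CANCELLED: ["review_partial_results"],
    Status.PARTIAL: ["identify_failed_nodes"],
}


def can(status: Status, action: str) -> bool:
    """Check whether the table lists `action` for the given status."""
    return action in ACTIONS[status]


print(can(Status.FAILED, "rerun"), can(Status.COMPLETED, "rerun"))
# prints True False
```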

Execution Information

Each execution record captures essential information for monitoring and analysis:

Identity & Version

- Execution ID: Unique identifier with one-click copy functionality

- Pipeline Version: Tracks which pipeline version was executed, crucial for debugging after updates

Timing & Performance

- Start Time: When execution began (date and time with millisecond precision)

- End Time: When execution completed or was terminated

- Duration: Total execution time, color-coded to highlight long-running workflows (durations over 1 minute appear in orange)
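The duration rule above is simple arithmetic on the two timestamps. The sketch below assumes the 1-minute threshold is exclusive ("over 1 minute"); the function name and the sample timestamps are made up for illustration.

```python
from datetime import datetime, timedelta

LONG_RUNNING = timedelta(minutes=1)  # threshold stated in the list above


def describe_duration(start: datetime, end: datetime) -> tuple[float, bool]:
    """Return (duration in seconds, whether it exceeds the 1-minute mark)."""
    duration = end - start
    return duration.total_seconds(), duration > LONG_RUNNING


start = datetime(2024, 1, 1, 12, 0, 0, 250_000)   # 12:00:00.250
end = datetime(2024, 1, 1, 12, 1, 15, 750_000)    # 12:01:15.750
print(describe_duration(start, end))  # prints (75.5, True)
```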

Trigger Information

- Manual: User-initiated from the interface

- Schedule: Automated runs via cron or interval schedules

- Webhook: External system triggers - Coming Soon

- Event: Event-driven execution from system events - Coming Soon

Filtering & Search Capabilities

Find specific executions quickly using comprehensive filtering options:

Filter and search controls for execution history including status filter and execution ID search

Status Filter

Filter executions by their current state (All, Pending, Running, Completed, Failed, Cancelled, Partial). Use this to focus on workflows needing attention or to analyze success patterns.

Execution ID Search

Search by execution ID using partial matching. Useful when tracking specific runs referenced in logs or shared by team members.

Sorting Options

Sort executions by:

- Start Time (default): Most recent runs appear first

- End Time: View completion order

- Duration: Identify slow-running workflows for optimization
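The combined effect of the status filter, the partial ID search, and sorting can be sketched as one function. The record fields and the `find_executions` helper are hypothetical. Case-insensitive matching and descending order for every sort key are assumptions; the text only states that the default Start Time sort shows the most recent runs first.

```python
def find_executions(executions, status="All", id_query="", sort_by="start_time"):
    """Filter by status and partial ID match, then sort in descending order."""
    matches = [
        e for e in executions
        if (status == "All" or e["status"] == status)
        and id_query.lower() in e["id"].lower()
    ]
    return sorted(matches, key=lambda e: e[sort_by], reverse=True)


executions = [  # made-up records; start_time is a plain number for brevity
    {"id": "exec-a1f3", "status": "Failed", "start_time": 100, "duration": 42.0},
    {"id": "exec-b7c9", "status": "Completed", "start_time": 200, "duration": 3.5},
    {"id": "exec-a1d8", "status": "Failed", "start_time": 300, "duration": 7.1},
]
failed = find_executions(executions, status="Failed", id_query="a1")
print([e["id"] for e in failed])  # prints ['exec-a1d8', 'exec-a1f3']
```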

URL-Based Sharing

All filter and sort states persist in the URL, enabling you to:

- Share specific filtered views with team members

- Bookmark commonly used filter combinations

- Create direct links in documentation or tickets
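Persisting filter state in a URL usually means encoding it as query parameters. The sketch below shows the general pattern with Python's standard library; the host, path, and parameter names (`status`, `sort`, `search`) are placeholders, since the real link format is not documented here.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_share_url(base_url: str, **filters) -> str:
    """Encode non-empty filter and sort settings as a query string."""
    params = {k: v for k, v in filters.items() if v}
    return f"{base_url}?{urlencode(params)}" if params else base_url


def read_filters(url: str) -> dict:
    """Recover the filter settings from a shared link."""
    return {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}


url = build_share_url(
    "https://maestrohub.example/executions",  # placeholder host and path
    status="Failed", sort="duration", search="",
)
print(url)
# prints https://maestrohub.example/executions?status=Failed&sort=duration
print(read_filters(url))  # prints {'status': 'Failed', 'sort': 'duration'}
```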

Available Actions

Take action on executions based on their status:

View Details (All Statuses)

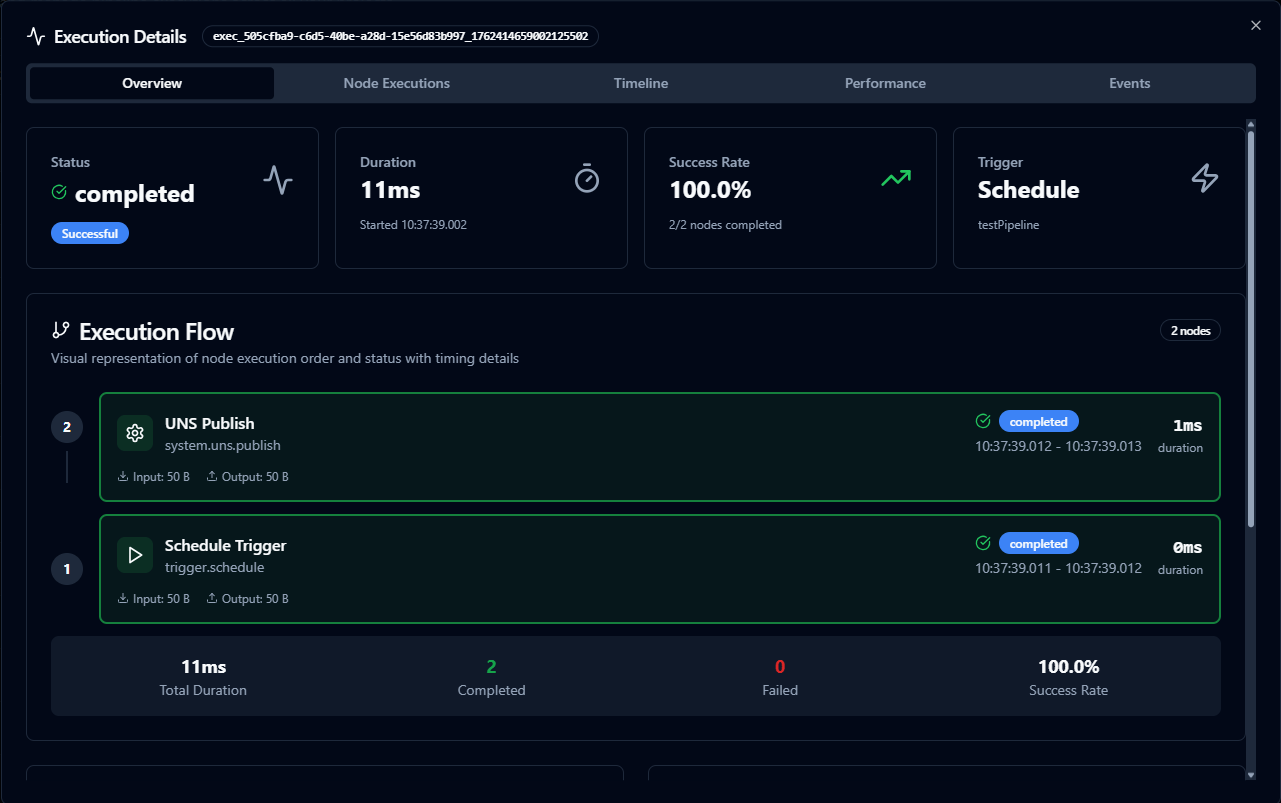

Detailed execution view with tabbed interface showing execution overview, node details, timeline, performance metrics, and events

Detailed logs are kept for a 2-hour retention period and are removed automatically once an execution is older than that. This keeps your workspace fast and responsive. You can still access high-level information for older executions, including execution status, duration, and basic execution metadata.

View Details opens a comprehensive tabbed interface for deep execution analysis:

Overview Tab

- Execution summary with key metrics (status, duration, trigger type)

- Visual execution flow showing node order and status

- Performance insights highlighting bottlenecks

- Error summary for failed executions

- Quick navigation to specific analysis tabs

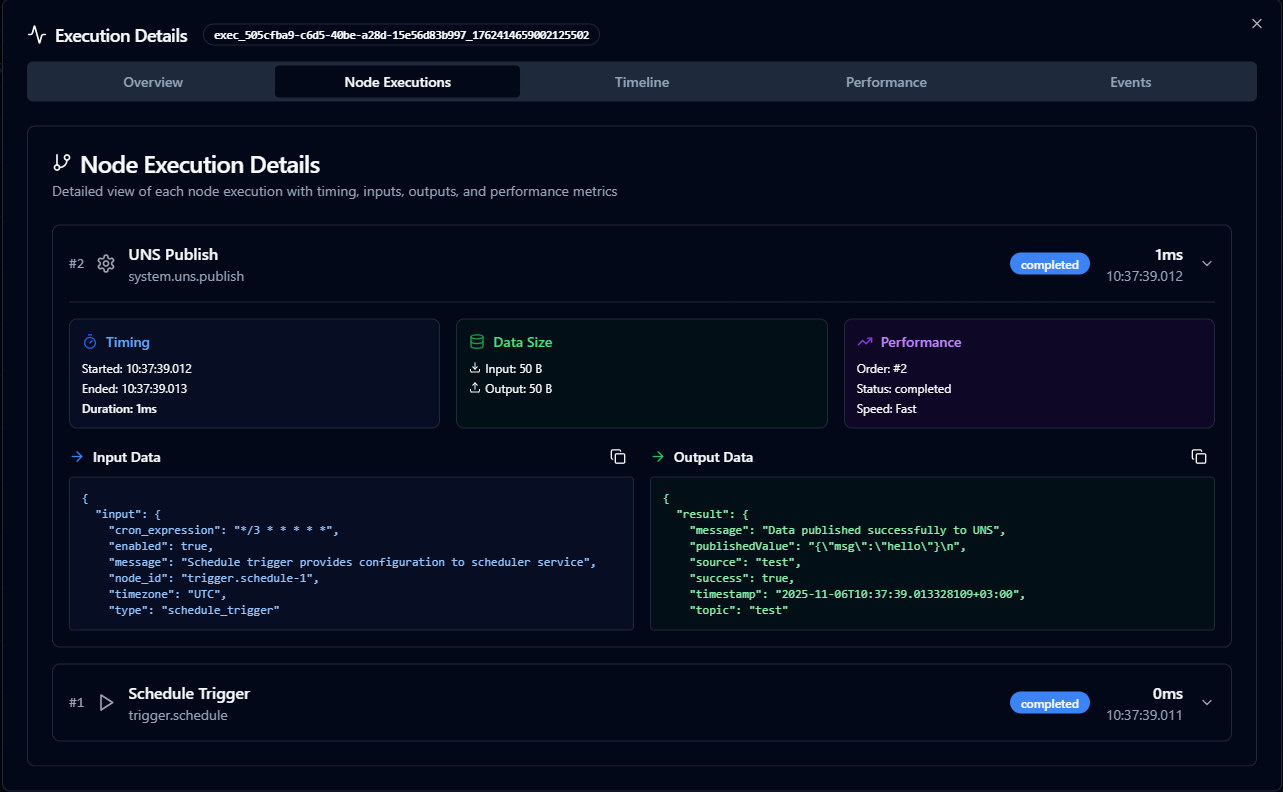

Node Executions Tab

- Expandable node-by-node breakdown

- Input and output data for each node

- Timing information (start time, end time, duration)

- Data size metrics (input/output bytes)

- Error messages for failed nodes

- One-click copy functionality for data inspection

Timeline Tab

- Chronological event stream with filtering

- Event type breakdown (pipeline start/complete, node start/complete/failed)

- Relative time tracking from execution start

- Detailed event metadata and data payloads

- Visual timeline with color-coded event types

Performance Tab

- Node-level performance metrics

- Execution time breakdown per node

- Data throughput analysis (input/output sizes)

- Performance comparison across nodes

- Status tracking with timing details

Events Tab

- Raw event log with full event data

- System-level execution events

- Detailed timestamps and event payloads

- Technical debugging information

Rerun Execution (Failed Status Only)

Restart a failed pipeline with the same input data. Useful for:

- Transient failures (network issues, temporary service unavailability)

- After fixing configuration or data issues

- Testing fixes without manually recreating inputs

Cancel Execution (Running Status Only)

Gracefully stop a running execution. The system will:

- Complete currently executing nodes when possible

- Mark the execution as cancelled

- Preserve results from completed nodes

- Stop queued nodes from executing

Rerunning an execution creates a new execution instance with the same inputs. The original failed execution is preserved for audit purposes. Always verify the root cause before rerunning to avoid repeated failures.
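The rerun behavior described above (a new instance, the same inputs, the original preserved) can be modeled in a few lines. This is an illustrative sketch: the record layout, the `rerun` function, and the `rerun_of` back-reference are hypothetical and not part of any documented MaestroHub interface.

```python
import copy
import uuid


def rerun(history, execution_id):
    """Append a new pending run that reuses a failed run's inputs."""
    original = next(e for e in history if e["id"] == execution_id)
    if original["status"] != "Failed":
        raise ValueError("only failed executions can be rerun")
    new_run = {
        "id": str(uuid.uuid4()),
        "status": "Pending",
        "inputs": copy.deepcopy(original["inputs"]),  # same inputs, own copy
        "rerun_of": original["id"],  # hypothetical back-reference for audits
    }
    history.append(new_run)  # the original record stays untouched
    return new_run


history = [{"id": "exec-1", "status": "Failed", "inputs": {"batch": 7}}]
new_run = rerun(history, "exec-1")
print(len(history), new_run["inputs"])  # prints 2 {'batch': 7}
```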

Performance Analysis

Execution history provides valuable insights for optimization:

Node-level execution details showing timing, input/output data, and performance metrics for individual pipeline steps

Duration Tracking

Monitor execution times to identify:

- Performance degradation over time

- Unusually slow runs requiring investigation

- Optimization opportunities across executions

Node-Level Analysis

Use the Node Executions and Performance tabs to analyze:

- Which nodes consume the most time (bottlenecks)

- Data throughput per node (input/output sizes)

- Parallelization effectiveness

- Node execution order and dependencies

- Retry frequency and patterns
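If node timings are copied out of the Node Executions tab, the same bottleneck analysis can be reproduced offline. The sketch below ranks nodes by duration and reports each node's share of the summed node time. The field names and sample numbers are invented, and with parallel execution the summed node time can exceed the wall-clock duration of the run.

```python
def slowest_nodes(node_runs, top=3):
    """Rank nodes by duration and report each one's share of the total."""
    total = sum(n["duration_ms"] for n in node_runs)
    if total == 0:
        return []
    ranked = sorted(node_runs, key=lambda n: n["duration_ms"], reverse=True)
    return [
        (n["node"], n["duration_ms"], round(100 * n["duration_ms"] / total, 1))
        for n in ranked[:top]
    ]


node_runs = [  # made-up measurements for illustration
    {"node": "read-sensor", "duration_ms": 120},
    {"node": "call-api", "duration_ms": 1500},
    {"node": "transform", "duration_ms": 380},
]
print(slowest_nodes(node_runs, top=1))  # prints [('call-api', 1500, 75.0)]
```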

Timeline Analysis

The Timeline tab enables you to:

- Track event sequences and timing

- Identify delays between node executions

- Filter events by type for focused analysis

- Measure relative timing from execution start

Success Rate Monitoring

Track pipeline reliability:

- Completed vs failed execution ratios

- Common failure patterns and error nodes

- Time-of-day or load-related issues
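The completed-versus-failed ratio and the most common failing nodes are straightforward to compute from a list of execution records. The following sketch assumes a hypothetical record shape with `status` and `failed_node` fields, and counts only runs that ended as Completed or Failed.

```python
from collections import Counter


def reliability_report(executions):
    """Summarize success rate and the nodes that fail most often."""
    finished = [e for e in executions if e["status"] in ("Completed", "Failed")]
    completed = sum(1 for e in finished if e["status"] == "Completed")
    rate = completed / len(finished) if finished else None
    failing_nodes = Counter(
        e["failed_node"] for e in finished if e["status"] == "Failed"
    )
    return rate, failing_nodes.most_common()


executions = [  # made-up records for illustration
    {"status": "Completed"},
    {"status": "Failed", "failed_node": "call-api"},
    {"status": "Completed"},
    {"status": "Failed", "failed_node": "call-api"},
    {"status": "Running"},
]
print(reliability_report(executions))  # prints (0.5, [('call-api', 2)])
```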

Real-Time Updates

Running executions automatically refresh every 5 seconds, providing:

- Live status updates without manual refresh

- Current node execution progress

- Up-to-date duration calculations

- Immediate notification when execution completes or fails
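The interface handles this refresh for you. For anyone building a similar watcher elsewhere, the underlying pattern is a fixed-interval polling loop, sketched below. The `watch` function and its `fetch_status` callback are hypothetical; no MaestroHub status endpoint is assumed.

```python
import time


def watch(fetch_status, interval_seconds=5.0, sleep=time.sleep):
    """Poll until the execution leaves the Pending/Running states."""
    while True:
        status = fetch_status()
        if status not in ("Pending", "Running"):
            return status
        sleep(interval_seconds)


# Stand-in for a real status lookup: replays a fixed sequence of statuses.
replay = iter(["Running", "Running", "Completed"])
print(watch(lambda: next(replay), sleep=lambda s: None))  # prints Completed
```

Passing `sleep` as a parameter keeps the loop testable without waiting five real seconds per iteration.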

Best Practices

Pipeline Design Excellence

Start Simple, Scale Smart

- Begin with Manual Trigger for testing

- Build and test incrementally

- Add scheduled triggers after thorough testing

- Use descriptive, action-oriented names

Documentation

- Add Sticky Notes for complex logic

- Use node descriptions

- Document assumptions and requirements

- Include contact information

Organization

- Use labels consistently

- Establish naming conventions

- Group related pipelines

- Track ownership and environment

Error Handling

- Configure retry policies for external integrations

- Use "Continue on Error" for non-critical operations

- Add condition nodes for data validation

- Plan for partial failures
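The interplay between a retry policy and "Continue on Error" can be sketched as a wrapper around a node's work. This is an illustration under stated assumptions: `run_node` and its parameters are invented names, and exponential backoff is one common retry policy chosen for the example, not necessarily the one MaestroHub applies.

```python
import time


def run_node(action, max_attempts=3, base_delay=1.0, continue_on_error=False,
             sleep=time.sleep):
    """Run `action`, retrying with exponential backoff between attempts."""
    for attempt in range(1, max_attempts + 1):
        try:
            return {"ok": True, "value": action(), "attempts": attempt}
        except Exception as error:
            if attempt < max_attempts:
                sleep(base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ...
                continue
            if continue_on_error:
                # Non-critical node: record the failure and let the run go on.
                return {"ok": False, "error": str(error), "attempts": attempt}
            raise  # critical node: the failure propagates


calls = {"count": 0}


def flaky():
    """Fails twice, then succeeds, to imitate a transient outage."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("service unavailable")
    return "payload"


print(run_node(flaky, sleep=lambda s: None))
# prints {'ok': True, 'value': 'payload', 'attempts': 3}
```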

Performance Optimization

Maximize Parallelization

- Use level-based execution strategy

- Identify independent operations

- Avoid unnecessary sequential dependencies

- Monitor parallelization opportunities
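One way to see why independent operations matter is to group a pipeline's nodes into levels, where every node in a level has all of its dependencies satisfied by earlier levels and could therefore run in parallel. The sketch below is a plausible reading of "level-based" execution, not MaestroHub's scheduler; the function name and the sample pipeline are made up, and every dependency is assumed to appear as a key in the mapping.

```python
def execution_levels(dependencies):
    """Group nodes into levels; nodes in one level have no unmet dependencies."""
    remaining = {node: set(deps) for node, deps in dependencies.items()}
    levels = []
    while remaining:
        ready = sorted(n for n, deps in remaining.items() if not deps)
        if not ready:
            raise ValueError("cycle detected; a pipeline must be acyclic")
        levels.append(ready)
        for node in ready:
            del remaining[node]
        for deps in remaining.values():
            deps.difference_update(ready)  # these dependencies are now met
    return levels


# node -> nodes it depends on (a made-up pipeline)
pipeline = {
    "trigger": [],
    "fetch-orders": ["trigger"],
    "fetch-stock": ["trigger"],
    "merge": ["fetch-orders", "fetch-stock"],
}
print(execution_levels(pipeline))
# prints [['trigger'], ['fetch-orders', 'fetch-stock'], ['merge']]
```

Here the two fetch nodes share a level because neither depends on the other. Adding an unnecessary connection between them would push one into a later level and lengthen the run.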

Optimize Data Transfer

- Pass only required fields between nodes

- Use transformation nodes to reduce data size

- Monitor data flow metrics

Batch Processing

- Use Buffer nodes for bulk operations

- Configure appropriate batch sizes

- Balance latency vs. throughput
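The latency-versus-throughput trade-off comes down to how many items are grouped before a bulk operation runs. The generic chunking helper below illustrates the idea; it is not the Buffer node's implementation, whose options are not described here, and `batches` is a name chosen for this example.

```python
def batches(items, batch_size):
    """Yield consecutive chunks of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


readings = list(range(7))
print(list(batches(readings, 3)))  # prints [[0, 1, 2], [3, 4, 5], [6]]
```

Larger batches mean fewer downstream calls (higher throughput), but each item waits longer before it is processed (higher latency).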

Strategic Conditioning

- Place filter conditions early in the pipeline

- Avoid running expensive operations on data that will be filtered out

- Use condition nodes efficiently
